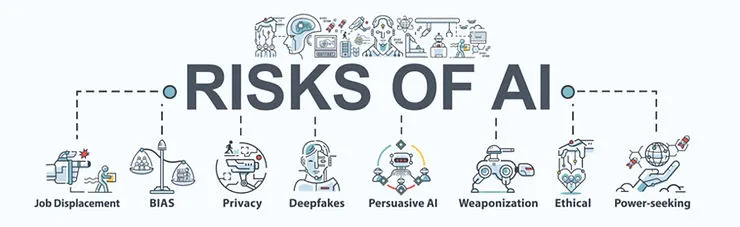

Artificial intelligence (AI) is rapidly transforming research in organisations across Australia. By processing massive datasets, AI tools uncover hidden trends and generate insights that would take people a lifetime to reach manually. However, with great power comes great responsibility: using AI safely requires robust security measures to safeguard sensitive information. This article explores how AI is revolutionising research, the risks it introduces, and the essential security controls organisations must implement.

In research, employees are the lifeblood of discovery. They gather data, formulate insightful questions, and guide AI toward useful outcomes. The transformative power lies in AI's ability to learn from what it has already seen. By analysing the data and research queries that employees provide, AI can produce more accurate results and anticipate future lines of inquiry.

Imagine an AI trained on decades of medical research data, predicting the spread of emerging diseases with remarkable precision. Similarly, a financial AI could identify signs of potential market bubbles before they burst. These scenarios illustrate AI's "learning loop," which propels research towards greater accuracy, efficiency, and new discoveries.

The ease of access to AI tools presents unique challenges for organisations. User-friendly interfaces let employees upload data for swift analysis, boosting research efficiency. However, this convenience creates security and privacy risks that reach well beyond the individual user.

For example, a marketing department might upload a customer database containing names, addresses, and purchasing habits to analyse consumer trends. While this approach seems efficient, what controls are in place to protect this information? An upload made without proper anonymisation could expose customers' personal information to people and systems that have no need to see it.
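One way to reduce that exposure is to strip direct identifiers before any data leaves the team. The following is a minimal sketch, not a complete anonymisation method: the field names (`customer_id`, `name`, `address`) and the `pseudonymise` helper are hypothetical, and it assumes a secret key that is stored outside the AI tool. Note that this is pseudonymisation only; detailed purchasing habits can still re-identify a person, so it complements access controls rather than replacing them.

```python
import hashlib
import hmac

# Hypothetical column names; adjust these to match the real customer schema.
DIRECT_IDENTIFIERS = {"name", "address"}


def pseudonymise(record: dict, secret_key: bytes) -> dict:
    """Return a copy of record without direct identifiers.

    The customer ID is replaced by a keyed hash (HMAC-SHA256), so the same
    customer always maps to the same reference, but the reference cannot be
    reversed without the secret key.
    """
    token = hmac.new(
        secret_key, str(record["customer_id"]).encode("utf-8"), hashlib.sha256
    ).hexdigest()
    cleaned = {
        key: value
        for key, value in record.items()
        if key not in DIRECT_IDENTIFIERS and key != "customer_id"
    }
    # Keep a shortened reference so purchases can still be grouped per customer.
    cleaned["customer_ref"] = token[:16]
    return cleaned


if __name__ == "__main__":
    sample = {
        "customer_id": "C-1001",
        "name": "Jane Citizen",
        "address": "1 Example St, Sydney",
        "purchases": ["coffee", "notebook"],
    }
    print(pseudonymise(sample, secret_key=b"replace-with-a-managed-secret"))
```

Using a keyed hash rather than a plain hash means someone who knows the customer ID format cannot simply recompute the references without the key.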

The concern extends beyond malicious intent; human error can also have severe consequences. Imagine a pharmaceutical team that, while seeking insights into the potential side effects of a new drug, accidentally uploads a confidential drug formula. Such an incident could lead to financial losses, reputational damage, and legal repercussions.

This highlights the importance of striking a balance between user-friendly AI and robust data security controls within Australian organisations. By implementing appropriate safeguards, organisations can ensure that sensitive information remains protected, even within the collaborative environment fostered by AI tools.
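As an illustration of one such safeguard, text can be screened for obvious warning signs before it is sent to an AI tool. The sketch below is a simplified assumption rather than a production control: the two patterns and the `screen_before_upload` function are hypothetical examples, and a real deployment would rely on a maintained data loss prevention ruleset.

```python
import re

# Illustrative patterns only; both are assumptions, not a complete ruleset.
PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "classification_marker": re.compile(
        r"\b(confidential|trade secret)\b", re.IGNORECASE
    ),
}


def screen_before_upload(text: str) -> list:
    """Return the names of the patterns found in text.

    An empty list means none of the illustrative patterns matched; it does
    not prove that the text is safe to share.
    """
    return [name for name, pattern in PATTERNS.items() if pattern.search(text)]


if __name__ == "__main__":
    findings = screen_before_upload("CONFIDENTIAL: send results to jane@example.com")
    if findings:
        print("Upload blocked, review required:", findings)
```

A check like this catches only the simplest mistakes, such as a document that still carries its classification marking, so it works best alongside user training and access controls.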

To navigate the evolving landscape of accessible AI tools, Australian organisations must adapt their policies with a focus on robust data security. Here are some crucial elements:

By implementing these essential security protocols, Australian organisations can leverage the power of AI tools with confidence, ensuring a secure and productive work environment.

The US National Institute of Standards and Technology (NIST) has developed the AI Risk Management Framework (AI RMF), a valuable framework to help organisations handle sensitive information responsibly when using AI tools.

AI tools often rely on vast amounts of data to learn and improve, including personal information, browsing habits, and even biometric data. While this data can personalise experiences, it also poses significant security risks. The NIST AI RMF is a voluntary framework organisations can use to manage the risks associated with AI systems. Here’s how it helps protect user privacy:

By following the NIST AI RMF principles, organisations can demonstrate their commitment to responsible data protection, fostering trust among users and stakeholders.

At Spartans Security, we serve as your reliable partner in helping your organisation use AI tools securely. Our seasoned security professionals specialise in establishing robust data governance frameworks designed for AI applications. We offer a comprehensive range of cybersecurity services to help Australian organisations, including:

While AI tools offer a powerful boost to research, wielding that power responsibly is paramount. By implementing robust security measures and regularly updating policies, Australian organisations can empower their employees to leverage the full potential of AI while safeguarding sensitive information. The future of Australian research lies in fostering intelligent collaboration between humans and AI, with trust and robust security as the foundation for success.

If you have any questions, get in touch at info@spartanssec.com.